Endianness

Endianness refers to the order in which a computer stores the bytes that make up a larger piece of data, such as a number.

In computing, all data (information) is stored in memory as small numbers called bytes. Larger numbers need more than one byte to be stored. The two usual orders in which those bytes can be stored are called little-endian and big-endian. Which one is used depends on the type of computer.
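As a small sketch in Python, the standard `sys.byteorder` value reports which of the two orders the computer running the program uses. The variable name `order` is only chosen for this example.

```python
import sys

# sys.byteorder is the text "little" or "big", depending on the machine
order = sys.byteorder

print("This computer is " + order + "-endian")
```

Most desktop and laptop computers today will report "little", but the answer depends on the machine.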

Say that we have a large number (32 bits long) like this:

0A0B0C0D ---> 0A | 0B | 0C | 0D

Computer memory holds one byte (8 bits) in each place, so the 32-bit number is split into four bytes. The order shown here is big-endian, because we start with the big end of the large number, the byte that is worth the most. (Note that this number is written in hexadecimal, or base-16.)
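The same split can be tried out in Python. This is only a sketch: the names `number` and `big` are made up for the example, while `int.to_bytes` is a standard Python method that turns a number into bytes in the order asked for.

```python
number = 0x0A0B0C0D  # the 32-bit example number, written in hexadecimal

# Ask for 4 bytes, with the big end of the number first
big = number.to_bytes(4, byteorder="big")

# Show each byte as two hexadecimal digits
print(" | ".join(f"{b:02X}" for b in big))  # prints: 0A | 0B | 0C | 0D
```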

There are two important things to know here. First, "end" here does not mean "the last", but rather "side". In other words, "big-endian" means something like "the big side first", not "the big number is at the finish". Second, in computing, numbers are usually grouped into bytes, and each byte is written as two hexadecimal digits. Each group is treated as a single thing, and the digits inside it do not switch places.

To write it in little-endian, we simply start on the little end, so it becomes:

0A0B0C0D ---> 0D | 0C | 0B | 0A

Note that it is the order of the bytes that changes. The two digits inside each byte stay in the same order, so the number does not become D0C0B0A0.
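A short Python sketch can show the little-endian order and what happens if the bytes are read back in the wrong order. The names `little`, `restored` and `wrong` are made up for the example; `int.to_bytes` and `int.from_bytes` are standard Python methods.

```python
number = 0x0A0B0C0D  # the same 32-bit example number

# Ask for 4 bytes, with the little end of the number first
little = number.to_bytes(4, byteorder="little")
print(" | ".join(f"{b:02X}" for b in little))  # prints: 0D | 0C | 0B | 0A

# Reading the bytes back in the same order gives the original number
restored = int.from_bytes(little, byteorder="little")
print(restored == number)  # prints: True

# Reading them as if they were big-endian gives a different number
wrong = int.from_bytes(little, byteorder="big")
print(f"{wrong:08X}")  # prints: 0D0C0B0A
```

The last line shows why two computers must agree on the order when they share data: the bytes are the same, but the number they are read as is not.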

Endianness Media

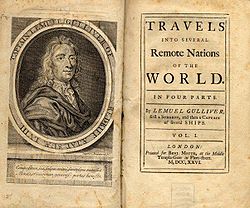

Gulliver's Travels by Jonathan Swift, the novel from which the term was taken