Statistics

Statistics is a branch of applied mathematics that deals with collecting, organizing, analyzing, interpreting and presenting data.[1][2][3] Descriptive statistics makes summaries of data.[4][5] Inferential statistics makes predictions.[6] Statistics helps in the study of many other fields, such as science, medicine,[7] economics,[8][9] psychology,[10] politics[11] and marketing.[12] Someone who works in statistics is called a statistician. In addition to being the name of a field of study, the word "statistics" can also mean numbers that are used to describe data or relationships.

History

The first known statistics are census data. The Babylonians did a census around 3500 BC, the Egyptians around 2500 BC, and the Ancient Chinese around 1000 BC. Several mathematicians during the Islamic Golden Age studied statistical inference, mainly for use in cryptanalysis.

Starting in the 16th century, mathematicians such as Gerolamo Cardano developed probability theory;[13][14][15][16][17] this brought statistics closer to being a science. Since then, people have collected and studied statistics on many things. Trees, starfish, stars, rocks, words: almost anything that can be counted has been a subject of statistics.

Collecting data

Before we can describe the world with statistics, we must collect data. The data that we collect in statistics are called measurements. After we collect data, we use one or more numbers to describe each observation or measurement. For example, suppose that we want to find out how popular a certain TV show is. We can pick a group of people (called a sample) out of the total population of viewers. Then we ask each viewer in the sample how often they watch the show. The sample is data that one can see, and the population is data that one cannot see (assuming that not every viewer in the population is asked). For another example, if we want to know whether a certain drug can help lower blood pressure, we could give the drug to people for some time and measure their blood pressure before and after.

Descriptive and inferential statistics

Numbers that describe the data one can see are called descriptive statistics. Numbers that make predictions about the data one cannot see are called inferential statistics.

Descriptive statistics involves using numbers to describe features of data. For example, the average height of women in the United States is a descriptive statistic: it describes a feature (average height) of a population (women in the United States).

Once the results have been summarized and described, they can be used for prediction. This is called inferential statistics. As an example, the size of an animal depends on many factors. Some of these factors are controlled by the environment, but others are inherited. A biologist might therefore make a model that says that there is a high probability that the offspring will be small if the parents were small. This model will probably predict the size better than guessing at random. Testing whether a certain drug can be used to cure a certain condition or disease is usually done by comparing the results of people who are given the drug against those who are given a placebo.

Methods

Most often, we collect statistical data by doing surveys or experiments. For example, an opinion poll is one kind of survey. We pick a small number of people and we choose questions to ask them. Then, we use their answers as the data. The process of choosing which data to collect is called choosing a measurement, and the process of collecting data (in this case, choosing whom to ask) is called sampling.

Samples

The choice of how to collect data is important; that choice can change the values that are seen. Suppose we want to measure the water quality of a big lake. If we take samples next to the waste drain, we will get different results than if the samples are taken in a far-away and hard-to-reach spot of the lake.

There are two kinds of problems which are commonly found when taking samples:

- If there are many samples, the values seen in the samples will likely be very close to the values in the real population. If there are very few samples, however, they might be very different from the values in the real population. This error is called sampling error (see also Errors and residuals in statistics).

- If the chosen samples only account for one part of the population (like the samples taken near the drain in the example at the beginning of this section), the values seen in the samples might be very different from the values in the total population. This is true even if a great number of samples is taken. This kind of error is called bias.
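The two problems above can be seen in a small simulation. The following Python sketch is only an illustration: the lake, its pollution numbers, and the names `near_drain`, `rest_of_lake` and `average_error` are all made up for this example.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so that every run gives the same numbers

# Made-up lake: 500 readings near the waste drain (high pollution)
# and 9,500 readings from the rest of the lake (low pollution).
near_drain = [rng.gauss(80, 5) for _ in range(500)]
rest_of_lake = [rng.gauss(20, 5) for _ in range(9500)]
lake = near_drain + rest_of_lake
true_mean = statistics.mean(lake)


def average_error(sample_size, repeats=200):
    """Average distance between a random sample's mean and the true mean."""
    errors = []
    for _ in range(repeats):
        sample = rng.sample(lake, sample_size)
        errors.append(abs(statistics.mean(sample) - true_mean))
    return statistics.mean(errors)


small_sample_error = average_error(5)    # few samples: large sampling error
large_sample_error = average_error(500)  # many samples: small sampling error

# Bias: 400 samples, but all of them taken next to the drain.
biased_sample = rng.sample(near_drain, 400)
biased_error = abs(statistics.mean(biased_sample) - true_mean)

print(round(small_sample_error, 1), round(large_sample_error, 1), round(biased_error, 1))
```

In this made-up lake, the random samples of 500 land much closer to the true mean than the random samples of 5. The 400 samples taken only near the drain stay far from the true mean, even though there are many of them.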

Errors

We can reduce chance errors by taking a larger sample, and we can avoid some bias by choosing randomly. However, sometimes large random samples are hard to take. Bias can also happen if some kinds of people are not asked, if people refuse to answer our questions, or if they know they are getting a fake treatment. These problems can be hard to fix. See standard error for more.

Descriptive statistics

Finding one value to act in place of multiple data values

Often, people find it easier to replace a whole set of data with a single number and work with that number instead. Many people think that the best number to choose is a number in the middle of the data (a "typical" value of the population). This number in the middle of the data is called its central tendency. There are three measures of central tendency that are often used: the mean (sometimes called the average), the median and the mode.

The examples below use this sample data:

| Name | A | B | C | D | E | F | G | H | I | J |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Score | 23 | 26 | 49 | 49 | 57 | 64 | 66 | 78 | 82 | 92 |

Mean

The formula for the commonly used mean (the so-called "arithmetic mean") is[18]

[math]\displaystyle{ \bar x = \frac{1}{N}\sum_{i=1}^N x_i = \frac{x_1+x_2+\cdots+x_N}{N} }[/math]

where [math]\displaystyle{ x_1, x_2, \ldots, x_N }[/math] are the data and [math]\displaystyle{ N }[/math] is the number of data values (see also Sigma Notation).

This means that one calculates the mean by adding up all the values and then dividing by the number of values. For the example above, the mean is:

[math]\displaystyle{ \bar x = (23+26+49+49+57+64+66+78+82+92)/10 = 58.6 }[/math]
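The same calculation can be done in a few lines of Python. This is only a sketch: the variable names are made up for this example, and `statistics.mean` comes from Python's standard library.

```python
import statistics

# The ten scores from the sample data table (A to J).
scores = [23, 26, 49, 49, 57, 64, 66, 78, 82, 92]

# Add up all the values, then divide by the number of values.
mean_by_hand = sum(scores) / len(scores)

# The standard library gives the same result.
mean_by_library = statistics.mean(scores)

print(mean_by_hand)     # 58.6
print(mean_by_library)  # 58.6
```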

The problem with the mean is that it is affected by very large or very small values. In statistics, these extreme values might be errors of measurement, but sometimes the population really does contain these values. If a set of data has one of these values, then the mean may not be similar to most or all of the original values. For example, if there are 10 people in a room who make $10 per day and 1 who makes $1,000,000 per day, then the mean of the data is $90,918 per day.

The mean also does not work well if a set of data includes multiple groups that are very different from each other. In a room of 10 people with five people who make $10 per day and five people who make $100 per day, the mean of these data is $55 per day.

In both cases, the mean is not the amount any single person makes; this fact makes the mean not very useful for some purposes.

Other kinds of means exist, like the geometric mean; other means are useful for other purposes.

Median

The median is the middle item of the data. For a given data set [math]\displaystyle{ X }[/math], this is sometimes written as [math]\displaystyle{ \widetilde{X} }[/math].[18] To find the median, we sort the data from the smallest number to the largest number, and then choose the number in the middle. If there is an even number of data values, there will not be a number right in the middle, so we choose the two middle ones and calculate their mean. In our example above, there are 10 items of data and the two middle ones are 57 and 64, so the median is (57+64)/2 = 60.5.

As another example, like the income example presented for the mean, consider a room with 10 people who have incomes of $10, $20, $20, $40, $50, $60, $90, $90, $100, and $1,000,000. Here, the median is $55, because $55 is the mean of the two middle numbers, $50 and $60. If the extreme value of $1,000,000 is ignored, the mean is about $53. In this case, the median is close to the value obtained when the extreme value is thrown out. The median solves the problem of extreme values described in the first example in the section on the mean above.

However, the median still does not work as well for data made of multiple groups. In a room of 10 people with five people who make $10 and five people who make $100 per day, the median of these data is still $55 per day, which is still not similar to most of the people in the room.
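The steps for finding the median, and the income examples above, can be checked with a short Python sketch. The function name `median_by_hand` and the variable names are made up for this example; `statistics.median` is part of Python's standard library.

```python
import statistics

scores = [23, 26, 49, 49, 57, 64, 66, 78, 82, 92]
incomes = [10, 20, 20, 40, 50, 60, 90, 90, 100, 1_000_000]
two_groups = [10] * 5 + [100] * 5


def median_by_hand(values):
    """Sort the data, then take the middle value (or the mean of the two middle values)."""
    ordered = sorted(values)
    middle = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[middle]
    return (ordered[middle - 1] + ordered[middle]) / 2


print(median_by_hand(scores))          # 60.5
print(statistics.median(incomes))      # 55.0, not affected by the extreme value
print(sum(incomes) / len(incomes))     # 100048.0, pulled up by the extreme value
print(statistics.median(two_groups))   # 55.0, not close to either group
```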

Mode

The mode is the most frequent item of data. For example, if there are 11 people in a room with incomes of $10, $20, $20, $40, $50, $60, $90, $90, $90, $100, and $1,000,000, then the mode is $90, because $90 occurs three times and all other values occur fewer than three times.

There can be more than one mode. For example, if there are 11 people in a room with incomes of $10, $20, $20, $20, $50, $60, $90, $90, $90, $100, and $1,000,000, the modes are $20 and $90. Data like this is called bi-modal: it has two modes. Bi-modality is very common, and it often indicates that the data is a combination of two different groups. For instance, the heights of all adults in the U.S. have a bi-modal distribution. This is because men and women have different average heights: 1.763 m (5 ft 9 1⁄2 in) for men and 1.622 m (5 ft 4 in) for women. These two peaks are apparent when both groups are combined.

The mode is the only form of average that can be used for data that cannot be put in order.
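The income examples above can be checked with Python's standard `statistics` module, as in the following sketch. The variable names are made up for this example, and the list of colours is invented data that shows a mode for values with no order.

```python
import statistics

incomes_one_mode = [10, 20, 20, 40, 50, 60, 90, 90, 90, 100, 1_000_000]
incomes_two_modes = [10, 20, 20, 20, 50, 60, 90, 90, 90, 100, 1_000_000]
favourite_colours = ["red", "blue", "red", "green"]  # made-up data with no order

print(statistics.mode(incomes_one_mode))        # 90
print(statistics.multimode(incomes_two_modes))  # [20, 90]
print(statistics.mode(favourite_colours))       # red
```

`statistics.multimode` (available from Python 3.8) returns every value that is tied for most frequent, so it is the safer choice when the data might be bi-modal.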

Finding the spread of the data

Another thing we can say about a set of data is how spread out it is. A common way to describe the spread of a set of data is the standard deviation. If the standard deviation of a set of data is small, then most of the data is very close to the average. If the standard deviation is large, though, then a lot of the data is very different from the average.

The standard deviation of a sample is generally different from the standard deviation of its originating population. Because of that, we write [math]\displaystyle{ \sigma }[/math] for population standard deviation, and [math]\displaystyle{ s }[/math] for sample standard deviation.[18]
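As a sketch of the difference, Python's standard `statistics` module has one function for each kind. `pstdev` treats the data as a whole population (it divides by the number of values), and `stdev` treats the data as a sample (it divides by one less than the number of values). The example below uses the ten scores from the sample data table; the variable names are made up for this example.

```python
import statistics

scores = [23, 26, 49, 49, 57, 64, 66, 78, 82, 92]

population_sd = statistics.pstdev(scores)  # treats the scores as the whole population
sample_sd = statistics.stdev(scores)       # treats the scores as a sample

print(round(population_sd, 2))  # 21.54
print(round(sample_sd, 2))      # 22.71
```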

If the data follows the common pattern called the normal distribution, then it is very useful to know the standard deviation. If the data follows this pattern (we would say the data is normally distributed), about 68 of every 100 pieces of data will be off the average by less than the standard deviation. Not only that, but about 95 of every 100 measurements will be off the average by less than two times the standard deviation, and about 997 in 1000 will be off the average by less than three times the standard deviation.
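These three numbers can be checked with `NormalDist` from Python's standard `statistics` module (available from Python 3.8). This is only a sketch, and the function name `share_within` is made up for this example.

```python
from statistics import NormalDist

# A normal distribution with mean 0 and standard deviation 1.
standard_normal = NormalDist(mu=0, sigma=1)


def share_within(k):
    """Share of a normal distribution within k standard deviations of the mean."""
    return standard_normal.cdf(k) - standard_normal.cdf(-k)


for k in (1, 2, 3):
    print(k, round(share_within(k), 4))
# 1 0.6827
# 2 0.9545
# 3 0.9973
```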

Other descriptive statistics

We also can use statistics to find out that some percent, percentile, number, or fraction of people or things in a group do something or fit in a certain category.

For example, social scientists used statistics to find out that about 50% of people in the world are male.
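A percent and a set of percentiles can be calculated from the sample scores in the same way. In the Python sketch below, the cut-off of 60 and the variable names are made up for this example; `statistics.quantiles` (available from Python 3.8) gives the three cut points that split the data into four equal parts, that is, the 25th, 50th and 75th percentiles.

```python
import statistics

scores = [23, 26, 49, 49, 57, 64, 66, 78, 82, 92]

# Percent of scores that are at least 60 (a made-up cut-off).
at_least_60 = [score for score in scores if score >= 60]
percent_at_least_60 = 100 * len(at_least_60) / len(scores)
print(percent_at_least_60)  # 50.0

# Three cut points that split the data into four equal parts.
quartiles = statistics.quantiles(scores, n=4)
print(quartiles[1])  # 60.5, the same as the median
```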

Related software

Many statistical software packages have been developed to support statisticians.

Statistics Media

Scatter plots and line charts are used in descriptive statistics to show the observed relationships between different variables, here using the Iris flower data set.

Confidence intervals: the red line is the true value of the mean in this example, and the blue lines are random confidence intervals for 100 realizations.

In this graph, the black line is the probability distribution for the test statistic, the critical region is the set of values to the right of the observed data point (the observed value of the test statistic), and the p-value is represented by the green area.

Bernoulli's Ars Conjectandi was the first work that dealt with probability theory as currently understood.

Carl Friedrich Gauss made major contributions to probabilistic methods leading to statistics.

Karl Pearson, a founder of mathematical statistics

gretl, an example of an open source statistical package

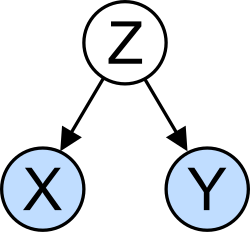

The confounding variable problem: X and Y may be correlated, not because there is a causal relationship between them, but because both depend on a third variable Z. Z is called a confounding factor.

References

- DeGroot, M. H., & Schervish, M. J. (2012). Probability and statistics. Pearson Education.

- Johnson, R. A., Miller, I., & Freund, J. E. (2000). Probability and statistics for engineers (Vol. 2000, p. 642p). London: Pearson Education.

- Walpole, R. E., Myers, R. H., Myers, S. L., & Ye, K. (1993). Probability and statistics for engineers and scientists (Vol. 5). New York: Macmillan.

- Dean, Susan; Illowsky, Barbara. "Descriptive Statistics: Histogram". cnx.org. Retrieved 2020-10-13.

- Larson, M. G. (2006). Descriptive statistics and graphical displays. Circulation, 114(1), 76-81.

- Asadoorian, M. O., & Kantarelis, D. (2005). Essentials of inferential statistics. University Press of America.

- Lang, T. A., Lang, T., & Secic, M. (2006). How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. ACP Press.

- Wonnacott, T. H., & Wonnacott, R. J. (1990). Introductory statistics for business and economics (Vol. 4). New York: Wiley.

- Newbold, P., Carlson, W. L., & Thorne, B. (2013). Statistics for business and economics. Boston, MA: Pearson.

- Aron, A., & Aron, E. N. (1999). Statistics for psychology. Prentice-Hall, Inc.

- Fioramonti, D. L. (2014). How numbers rule the world: The use and abuse of statistics in global politics. Zed Books Ltd.

- Rossi, P. E., Allenby, G. M., & McCulloch, R. (2012). Bayesian statistics and marketing. John Wiley & Sons.

- Chow, Y. S., & Teicher, H. (2003). Probability theory: independence, interchangeability, martingales. Springer Science & Business Media.

- Feller, W. (2008). An introduction to probability theory and its applications (Vol. 2). John Wiley & Sons.

- Durrett, R. (2019). Probability: theory and examples (Vol. 49). Cambridge University Press.

- Jaynes, E. T. (2003). Probability theory: The logic of science. Cambridge University Press.

- Chung, K. L., & Zhong, K. (2001). A course in probability theory. Academic Press.

- "List of Probability and Statistics Symbols". Math Vault. 2020-04-26. Retrieved 2020-10-13.

- Cho, M., & Martinez, W. L. (2014). Statistics in Matlab: A primer (Vol. 22). CRC Press.

- Martinez, W. L. (2011). Computational statistics in MATLAB®. Wiley Interdisciplinary Reviews: Computational Statistics, 3(1), 69-74.

- Crawley, M. J. (2012). The R book. John Wiley & Sons.

- Dalgaard, P. (2008). Introductory statistics with R. Springer.

- Maronna, R. A., Martin, R. D., & Yohai, V. J. (2019). Robust statistics: theory and methods (with R). John Wiley & Sons.

- Ugarte, M. D., Militino, A. F., & Arnholt, A. T. (2008). Probability and Statistics with R. CRC Press.

- Bruce, P., Bruce, A., & Gedeck, P. (2020). Practical Statistics for Data Scientists: 50+ Essential Concepts Using R and Python. O'Reilly Media.

- Kruschke, J. (2014). Doing Bayesian data analysis: A tutorial with R, JAGS, and Stan. Academic Press.

- Khattree, R., & Naik, D. N. (2018). Applied multivariate statistics with SAS software. SAS Institute Inc.

- Wagner III, W. E. (2019). Using IBM® SPSS® statistics for research methods and social science statistics. Sage Publications.

- Pollock III, P. H., & Edwards, B. C. (2019). An IBM® SPSS® Companion to Political Analysis. CQ Press.

- Babbie, E., Wagner III, W. E., & Zaino, J. (2018). Adventures in social research: Data analysis using IBM SPSS statistics. Sage Publications.

- Aldrich, J. O. (2018). Using IBM® SPSS® Statistics: An interactive hands-on approach. Sage Publications.

- Stehlik-Barry, K., & Babinec, A. J. (2017). Data Analysis with IBM SPSS Statistics. Packt Publishing Ltd.

- Gunarto, H. (2019). Parametric & Nonparametric Data Analysis for Social Research: IBM SPSS. LAP Academic Publishing. ISBN 978-6200118721.

Other websites

Media related to Statistics at Wikimedia Commons
